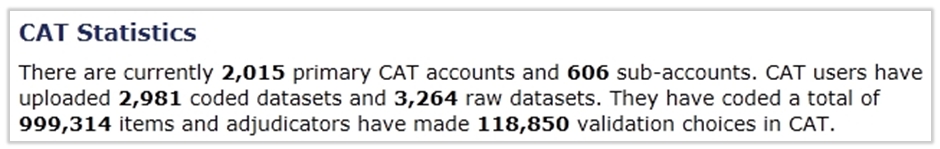

Texifter manages the Coding Analysis Toolkit (CAT), a free, open-source, Web-based and FISMA-compliant system launched in the fall of 2007 and hosted by the University of Pittsburgh. CAT is the precursor to PCAT and DiscoverText.

This is a big day for the CAT team, as we are on the brink of recording the 1 millionth coding choice in the system.

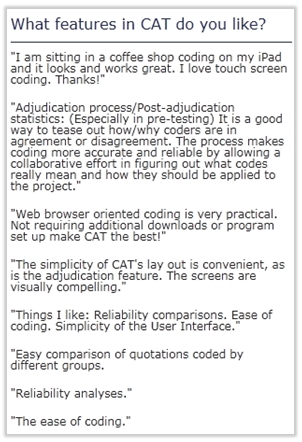

Why do people like to use this software? Certainly the price helps. However, over the years we have engineered CAT to make some of the most common coding and validation tasks easier. CAT uses a simple keystroke coding interface and predefined text spans to limit the pain caused by using a mouse.

More important to regular CAT users are the on-board tools for easily calculating multi-coder reliability. CAT simplifies the process of assigning the same coding task to a group of coders, who can code asynchronously via the Web. When the coding is done, it is a simple matter to generate a table of inter-rater reliability statistics that shows how different coders use the various codes. When pre-testing a new coding scheme, this on-the-fly measure of reliability is a key learning and training tool we use in QDAP all the time.
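
CAT's exact reliability formulas aren't spelled out here, so what follows is only a minimal sketch of what a pairwise reliability table can contain. It assumes every coder labeled the same ordered list of text spans with one code apiece, and it reports simple percent agreement plus Cohen's kappa, one common chance-corrected statistic. The function names and sample data are hypothetical and are not CAT's API.

```python
from collections import Counter
from itertools import combinations


def percent_agreement(a, b):
    """Share of items on which two coders assigned the same code."""
    if len(a) != len(b) or not a:
        raise ValueError("coders must label the same non-empty list of items")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(a)
    p_o = percent_agreement(a, b)
    freq_a, freq_b = Counter(a), Counter(b)
    # Chance agreement: probability that both coders pick the same code
    # if each chose independently according to their own code frequencies.
    p_e = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if p_e == 1:
        return float("nan")  # kappa is undefined when chance agreement is total
    return (p_o - p_e) / (1 - p_e)


def reliability_table(codings):
    """One row (coder1, coder2, agreement, kappa) per pair of coders."""
    return [
        (c1, c2, percent_agreement(a, b), cohens_kappa(a, b))
        for (c1, a), (c2, b) in combinations(sorted(codings.items()), 2)
    ]


# Hypothetical sample: three coders, six text spans, three codes.
sample = {
    "coder_a": ["pos", "neg", "pos", "neu", "pos", "neg"],
    "coder_b": ["pos", "neg", "neg", "neu", "pos", "neg"],
    "coder_c": ["pos", "pos", "pos", "neu", "pos", "neg"],
}

for c1, c2, agree, kappa in reliability_table(sample):
    print(f"{c1} vs {c2}: agreement={agree:.2f}, kappa={kappa:.2f}")
```

Kappa discounts the agreement two coders would reach by chance alone, so it is usually lower than raw agreement: in the sample, coder_b and coder_c agree on 4 of 6 spans, yet their kappa is 0.50.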

Probably the most important innovation introduced by CAT is the adjudication module. The 118,850 adjudication choices CAT users have recorded to date grew out of our practice of comparing multi-coder experiments with pen and paper. Aside from using lots of paper in big experiments, that approach left us with the time-consuming job of transferring our validation choices back into the software we were using at the time.

Validation in CAT allows an expert or consensus team to review coding choices one at a time and score them as valid or invalid. The system reports validity as a percentage by code, coder and project. This (often iterative) step is absolutely critical when training a coding team.
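
As a rough illustration of that kind of report, the sketch below groups validation decisions and computes the percentage marked valid for any one field. The `ValidationChoice` record and `validity_by` helper are hypothetical names for this example; they assume each decision carries its project, coder, code and a valid/invalid flag, and they do not reflect CAT's internal schema.

```python
from collections import defaultdict
from typing import NamedTuple


class ValidationChoice(NamedTuple):
    """One expert decision about a single coding choice (hypothetical schema)."""
    project: str
    coder: str
    code: str
    valid: bool


def validity_by(choices, field):
    """Percentage of reviewed choices marked valid, grouped by `field`."""
    totals = defaultdict(int)
    valid = defaultdict(int)
    for choice in choices:
        key = getattr(choice, field)  # e.g. "code", "coder" or "project"
        totals[key] += 1
        valid[key] += choice.valid  # True counts as 1, False as 0
    return {key: 100.0 * valid[key] / totals[key] for key in totals}


# Hypothetical sample of five validation decisions in one project.
choices = [
    ValidationChoice("p1", "ann", "pos", True),
    ValidationChoice("p1", "ann", "neg", False),
    ValidationChoice("p1", "bob", "pos", True),
    ValidationChoice("p1", "bob", "pos", True),
    ValidationChoice("p1", "bob", "neg", True),
]

for field in ("code", "coder", "project"):
    print(field, validity_by(choices, field))
```

Grouping by "coder" on this sample gives 50% for ann and 100% for bob; passing "code" or "project" produces the other two views from the same list of decisions.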