Immediately after the judge read the not guilty verdict in the high-profile Casey Anthony criminal case, total internet traffic to major news outlets doubled and, most importantly, outsiders took to social media to share their emotions and opinions. When this occurred, I used DiscoverText to immediately begin scraping Facebook and Twitter feeds to archive public responses to what may be the most shocking verdict since O.J. Simpson.

My goal in importing the feeds was to capture the data, code for sentiment, train a classifier, classify the data, and calculate the percentage of people using social media to agree, disagree, or simply joke about the verdict. This event, much like the death of Osama Bin Laden, offered a perfect opportunity to display the power of DiscoverText over unstructured social media data.

I set the feed scheduler to import public Facebook and Twitter feeds containing the keywords “Anthony” and “Legal System.” After my first feed ingestion, I had nearly 50,000 posts to analyze. Within the next hour, that number had doubled. This feed will update every hour for the next 4 days, and over those 4 days the total content will steadily grow. Similar to my experiment using data collected from ESPN tweets, in this blog post I will discuss the amount of data I harvested in the first day, the diverse content of that data, the sentiment coding process, and the results I found after coding and classifying the data.

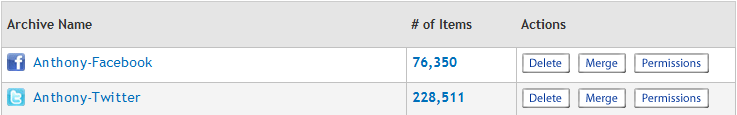

To get a better understanding of the general content, I first generated a Tag Cloud from the 216,000 Anthony Twitter posts I had imported, using the newly improved Cloud Explorer feature, which displays the most used terms in an archive.
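
For readers curious about what a term cloud is counting underneath, here is a minimal sketch in Python. It is not how Cloud Explorer is implemented; the sample posts, the stopword list, and the `top_terms` helper are all assumptions made up for illustration.

```python
import re
from collections import Counter

# Hypothetical sample posts; the real archive held about 216,000 tweets.
posts = [
    "Casey Anthony not guilty? This is OJ all over again",
    "Dexter would know what to do about Casey Anthony",
    "The jury followed the law in the Anthony case",
]

# A tiny, hand-picked stopword list (an assumption, not DiscoverText's list).
STOPWORDS = {
    "the", "a", "is", "to", "in", "this", "what",
    "do", "about", "all", "not", "would", "over",
}

def top_terms(texts, n=5):
    """Return the n most frequent non-stopword terms across all texts."""
    counts = Counter()
    for text in texts:
        # Lowercase and keep runs of letters/apostrophes as tokens.
        for token in re.findall(r"[a-z']+", text.lower()):
            if token not in STOPWORDS:
                counts[token] += 1
    return counts.most_common(n)

print(top_terms(posts, 2))
```

A tag cloud then simply scales each term's font size by its count.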

When studying the top 100 terms used, the high-volume usage of “Casey” and “Anthony” certainly was not shocking. However, giving us more of an idea of where people’s minds were focused, over 10,000 people made a connection with the OJ Simpson trial. Nearly 4,000 people referenced Kim Kardashian, who had expressed a dissenting opinion of the verdict. Ironically, her father defended “OJ.” Finally, adding to the many pop-culture references, people compared Ms. Anthony with Showtime serial killer Dexter Morgan, who was mentioned in over 3,000 posts.

Analyzing the Tag Cloud revealed many of the posts to be jokes regarding the verdict. These posts could be interpreted as disagreement with the verdict; however, when coding the data, I kept jokes separate, using four codes: “Agree,” “Disagree,” “Jokes,” and “Other.” Looking at the Tag Cloud, one can hypothesize that the Twitter community is strongly against this verdict and is willing to joke about it, something even Jay Leno can’t get away with.

To train the classifier, I coded 500 tweets, and then classified a sample of 1,000. To measure accuracy, I validated 200 randomly selected tweets and found the classifier to be 75% accurate. An accuracy rate this high is superb considering the wide array of Twitter data that had been imported.
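
The validation step boils down to comparing my own code for each tweet against the classifier's label and counting the matches. A minimal sketch, assuming both sets of labels are available as plain lists (the `accuracy` helper and the four sample labels are hypothetical, not an export from DiscoverText):

```python
def accuracy(human_codes, predicted_codes):
    """Share of items where the classifier's label matches the human code."""
    if len(human_codes) != len(predicted_codes):
        raise ValueError("both lists must have the same length")
    if not human_codes:
        raise ValueError("need at least one validated item")
    matches = sum(h == p for h, p in zip(human_codes, predicted_codes))
    return matches / len(human_codes)

# Hypothetical validation sample of four tweets (the real one had 200).
human = ["Disagree", "Jokes", "Other", "Agree"]
predicted = ["Disagree", "Jokes", "Disagree", "Agree"]

print(accuracy(human, predicted))  # 0.75
```

With 200 validated tweets, a 75% accuracy corresponds to 150 matching labels.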

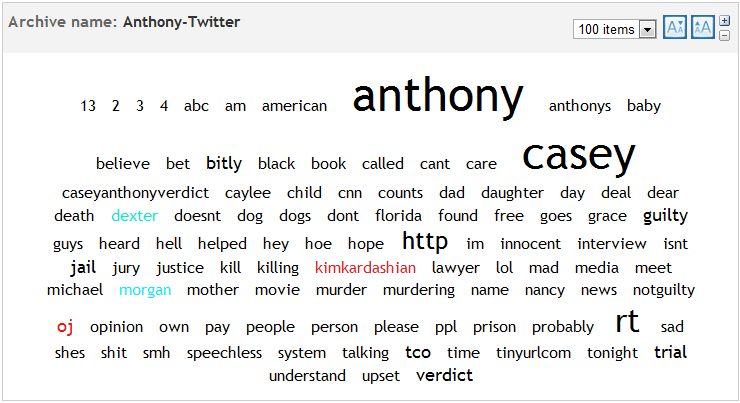

To visualize the breakdown by percentage, I used the Classifier Report, which gave me results very similar to what I suspected when coding. 46% of tweets disagreed with the verdict, 27% joked about the verdict, 21% were unrelated to the verdict, and 4% agreed with the verdict.
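
A breakdown like this is just a tally of the classifier's labels turned into percentages. Here is a rough sketch, assuming the labels come out as a simple list; the `label_percentages` helper and the sample labels are made up for illustration and do not reproduce the Classifier Report itself.

```python
from collections import Counter

def label_percentages(labels):
    """Map each label to its share of the total, as a percentage."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {
        label: round(100 * count / total, 1)
        for label, count in counts.items()
    }

# Hypothetical classifier output for four tweets.
sample_labels = ["Disagree", "Disagree", "Jokes", "Other"]

print(label_percentages(sample_labels))
```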

The amount of data collected and the passionate responses illustrate that a small but vocal segment of the population has been actively tweeting about this case. Based on the tweets harvested by DiscoverText and the sample I experimented with, it can be concluded that the majority certainly do not agree with the jury's verdict.