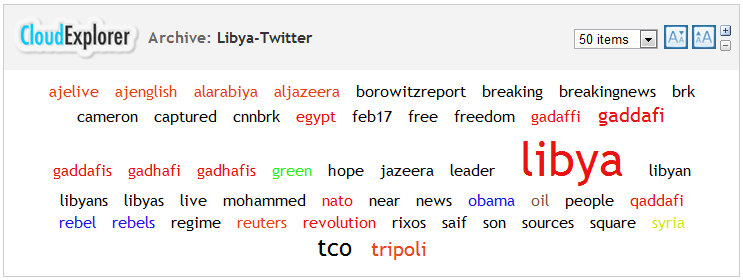

This weekend, opposition forces in Libya fought a ground war to oust long-standing dictator Col. Gaddafi. Simultaneously, a global news war was waged, as news sources competed to be the shining star in broadcasting the latest from Tripoli. Some of the best news came directly from Twitter, which, to no one’s surprise, exploded with information as the opposition forces advanced. With this surge in reporting on Libya, I began downloading Twitter feeds with DiscoverText on Sunday evening, using the keywords “Libya” and “Tripoli.”

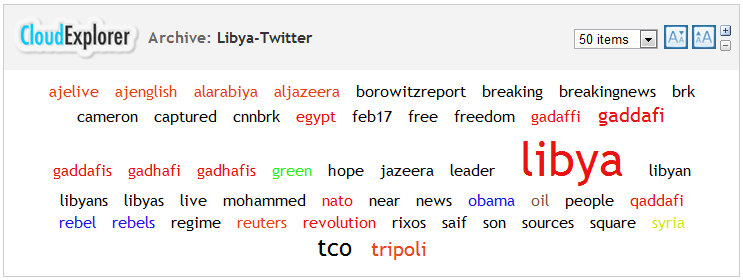

By 6AM the following morning, about 15 hours later, I had collected about 25,000 posts for both keywords. After lurking on Facebook and Twitter, and using the Tag Cloud to see

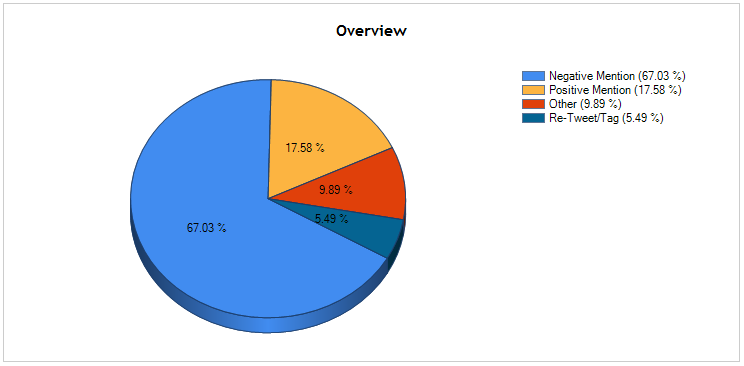

trends in the data, I had noticed many people seemed upset with the quality of the news coverage, especially the coverage from U.S. sources. Josh, my colleague, found that CNN is in need of a geography lesson. Using the collected posts, my goal was the find which global news source had the highest public satisfaction. To calculate this, I searched my “Libya” archive for major world news outlets, including, “CNN,” “Fox News,” “MSNBC,” “Al-Jazeera,” “Sky,” and “BBC.” I created individual buckets and datasets for each news source, and would code these for sentiment by using the coding scheme “Positive Mention,” “Negative Mention,” “Re-Tweet/Tag,(includes links)” and the “Other (includes general references, etc).”To perform this experiment, I would code 25% of each dataset, and then leave the rest up to the powerful Bayesian classifier built into DiscoverText.
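For readers curious what "code a quarter by hand, then let a Bayesian classifier label the rest" looks like in practice, here is a minimal sketch. It is a plain multinomial naive Bayes with add-one smoothing, not DiscoverText's actual implementation (which this post does not describe). The class name, the tokenizer, and the toy training tweets are all made up for illustration.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase a tweet and split it into word-like tokens."""
    return re.findall(r"[a-z0-9#@']+", text.lower())


class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self):
        self.label_counts = Counter()            # hand-coded tweets per label
        self.word_counts = defaultdict(Counter)  # token counts per label
        self.vocab = set()

    def train(self, coded):
        """coded: iterable of (tweet_text, label) pairs coded by hand."""
        for text, label in coded:
            self.label_counts[label] += 1
            for tok in tokenize(text):
                self.word_counts[label][tok] += 1
                self.vocab.add(tok)

    def classify(self, text):
        """Return the label with the highest log-posterior score."""
        total = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, n in self.label_counts.items():
            score = math.log(n / total)  # log prior
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            for tok in tokenize(text):
                score += math.log((self.word_counts[label][tok] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Toy, invented examples standing in for the hand-coded 25%.
hand_coded = [
    ("great live coverage", "Positive Mention"),
    ("excellent reporting tonight", "Positive Mention"),
    ("terrible coverage, awful analysis", "Negative Mention"),
    ("awful, wrong map again", "Negative Mention"),
]

model = NaiveBayes()
model.train(hand_coded)
print(model.classify("awful coverage"))
```

With real data, the hand-coded sample would be the 25% of each outlet's dataset, and `classify` would be run over the remaining 75%.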

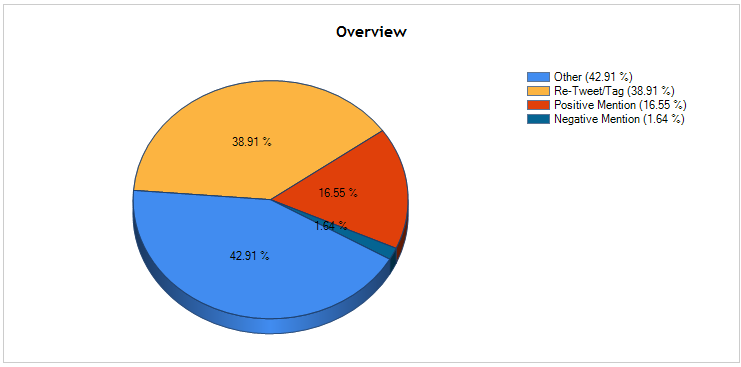

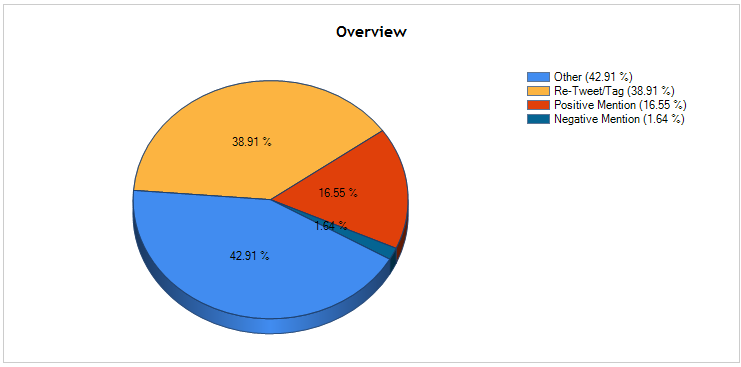

After coding 137 of the 550 “Al-Jazeera” tweets, I found the Doha, Qatar-based news service to be heavily praised. With comments such as “If Americans want to watch actual live coverage of Libya, they should download Al Jazeera English to their IPhones” and “The revolution is being televised on Al-Jazeera English. Even better than Sky. BBC nowhere,” Al-Jazeera clearly seemed to do an excellent job covering Libya. After classification, the report held true to my experience in coding and showed that most people either re-tweeted Al-Jazeera tweets or were positive about the news service.

Only slightly over 1% of the tweets were negative about the Al-Jazeera coverage, making it the news service to beat. Another positive for Al-Jazeera was the number of people who re-tweeted or simply mentioned the news service. This outpouring of support and recognition of Al-Jazeera by a mostly American audience should come as welcome news. The service is currently aggressively expanding its English offerings and will soon be on television sets throughout the United States under the name Al-Jazeera English.

The usually highly regarded British news service BBC seemed to be heavily ridiculed, not for its coverage but for its lack of urgency in covering the issue. When coding, I noticed this from statements such as, “BBC America still running with Top Gear, Al Jazeera live from Benghazi,” and “More #BBC Journalists at Glastonbury than in #Libya? I want my money back.”

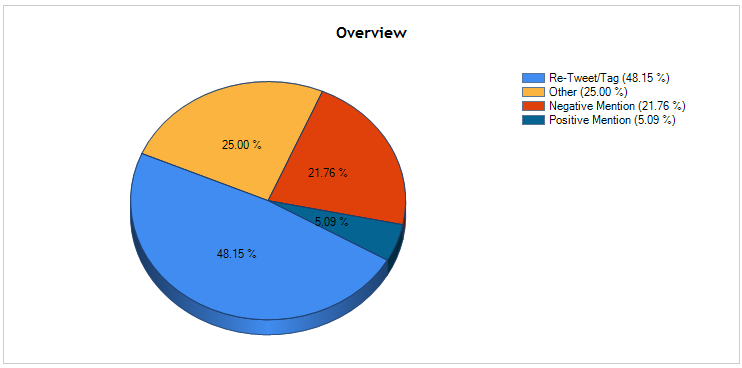

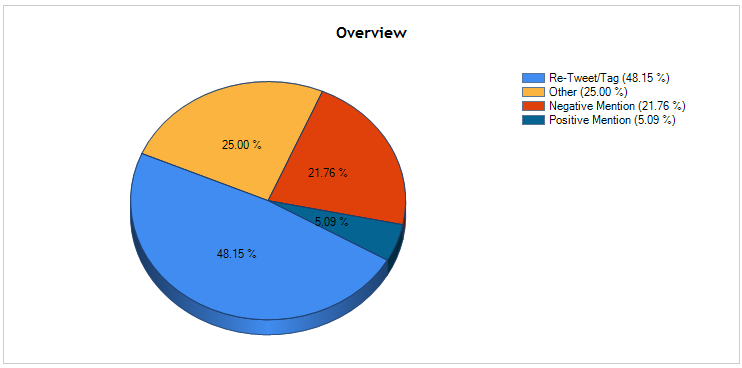

In the coding report, my thoughts were essentially mirrored, as over 21% of the tweets were negative. One positive for BBC was that nearly 50% of the tweets were re-tweets, suggesting that a fair number of people use BBC as their news source and are happy with the coverage. The moral for BBC, thanks to DiscoverText, should be this: when the next Middle East uprising comes to fruition (Syria?), it would be wise to turn off Top Gear and immediately switch to once-in-a-lifetime events to satisfy viewers.

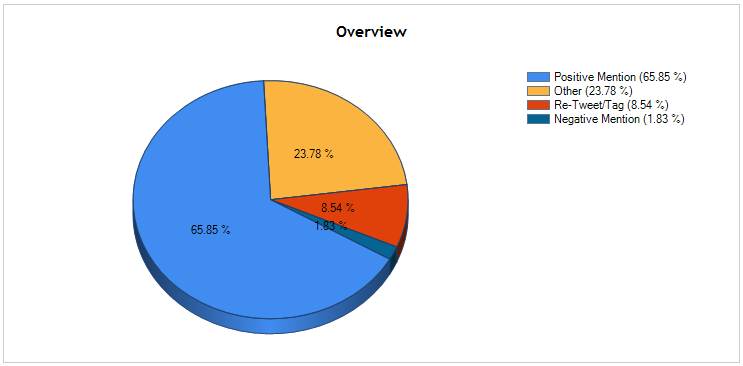

I also collected tweets that mentioned the other British news outlet, Sky Broadcasting. This outlet is not as well known as BBC, but according to the posts, the organization made its mark when reporting on Libya. The chatter about Sky was overwhelmingly positive. Often, Sky was compared favorably with other world news outlets, with posts like, “Sky News and al Jazeera are making US cable coverage of #Libya look like Wayne’s World.”

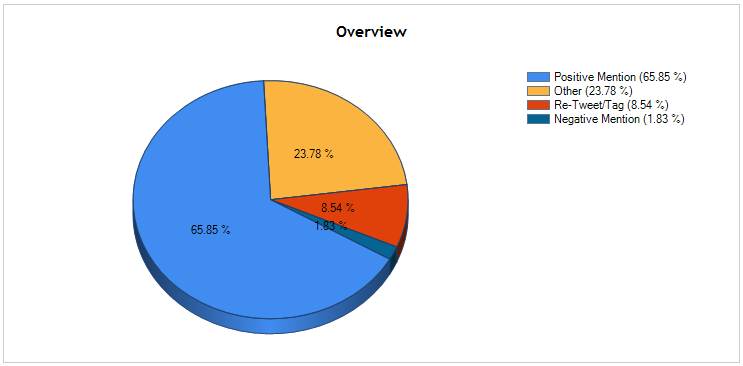

The classifier report revealed that an astonishing 65% of mentions were positive, a number rivaled only by Al-Jazeera. One tweet that best described the relationship between the two noted the crisp, riveting images coming from Sky and the superb analysis from Al-Jazeera, suggesting the ultimate combination of analysis and images.

When coding the CNN data, I found that the statements contained the most mixed sentiment. From the posts, it seems that CNN’s coverage appeared to be thrown together at the last minute and was factually inaccurate. Many people re-tweeted the post, “#Libya is not #Iraq..not begun by NATO, this; begun by the will of masses of Libyans. Somebody tell StevenCook on CNN to stop comparing to Bagdad.” Another example of people’s discontent with the CNN coverage was the statement, “Sky News web coverage of Libya approximately 1,000% better than CNN’s.”

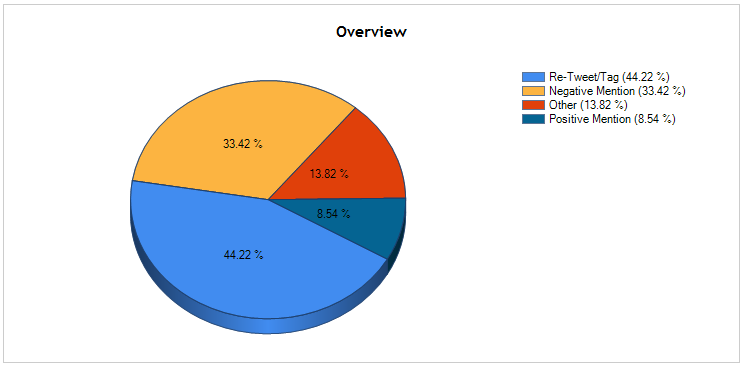

According to the classification report, over 30% of tweets regarding CNN were negative. There could be a few reasons for this high number. CNN is commonly regarded as the best source for news in the U.S. and is watched by those more critical and knowledgeable about the subject. In the future, CNN might be wise to employ a Middle East expert to discuss the issues, instead of relying on the “janitor and the cleaning lady,” as one tweet described the Sunday late-night coverage of the events unfolding in Tripoli.

The other two U.S. news outlets, Fox News and MSNBC, provided considerably less material to analyze, with only 150 tweets between the two. When coding MSNBC, it seemed the only reason anyone mentioned the network was to rake it over the coals with dubious honors such as, “In fairness, though, I would respect the analysis of the bear on MSNBC more than John Bolton’s on Fox News.” It seems the network mainstay decided to show re-runs of Lock-Up and other reality news shows instead of covering Libya.

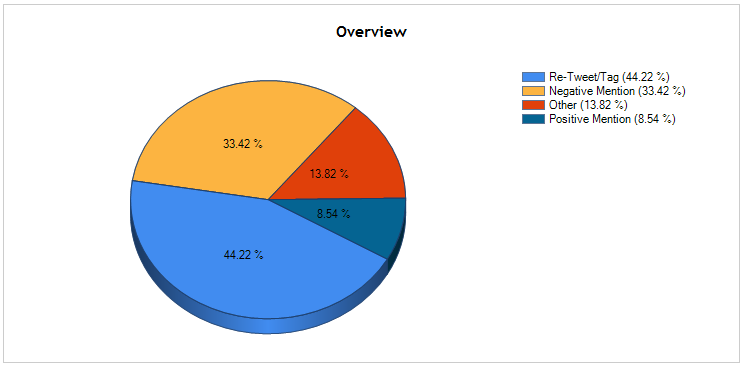

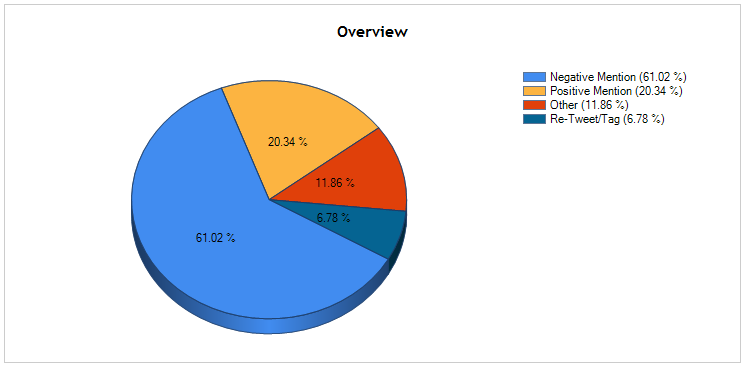

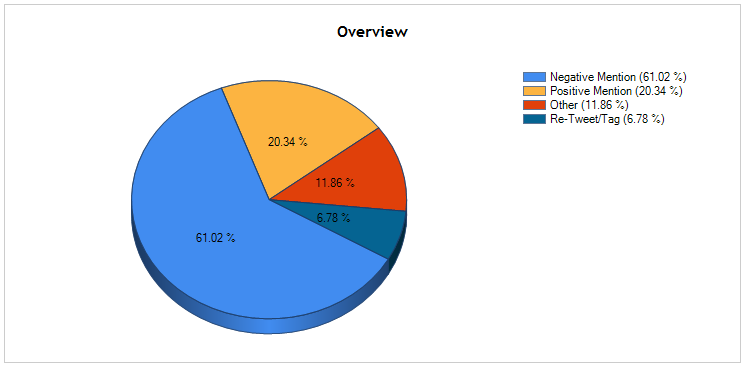

The classification report revealed that over 60% of the tweets about MSNBC were negative, much in line with what I witnessed. Much the same occurred with Fox News, with the majority of the posts complaining about the often-maligned conservative bias. This was displayed in tweets like, “So how do you think this plays out on Fox News and WSJ? Obama let al-Qaeda take over Libya?”

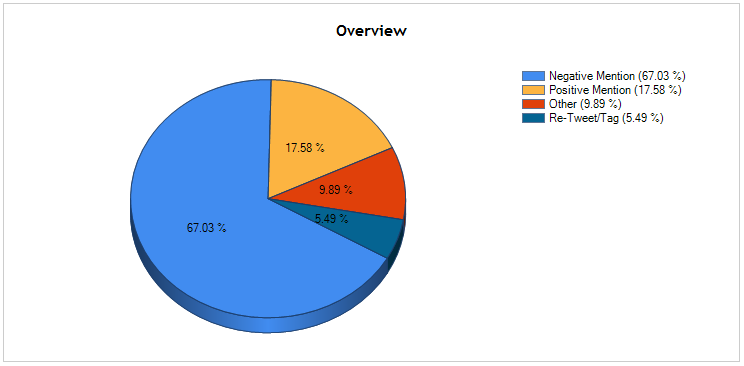

The anti-Fox stance became more evident when the classifier report showed that over 65% of the tweets were negative about Fox’s coverage of Libya. The DiscoverText tools revealed the winners and losers in the Libya coverage. Al-Jazeera and, somewhat surprisingly, Sky Broadcasting drew high marks from the tweeting public.
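Lining up the negative shares quoted in the classifier reports makes the ranking easier to see. The short sketch below uses only the lower bounds stated in this post (for example, “over 21%” becomes 21). Sky is left out because only its positive share was reported. The variable names are illustrative.

```python
# Lower bounds on the negative share (in percent), as quoted in this post.
negative_floor = {
    "Al-Jazeera": 1,   # "slightly over 1%"
    "BBC": 21,         # "over 21%"
    "CNN": 30,         # "over 30%"
    "MSNBC": 60,       # "over 60%"
    "Fox News": 65,    # "over 65%"
}

# Sort from least to most negative.
ranked = sorted(negative_floor.items(), key=lambda item: item[1])

for outlet, pct in ranked:
    print(f"{outlet}: over {pct}% negative")
```

Because these are floors rather than exact figures, the ordering is only as reliable as the gaps between them, which here are fairly wide.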

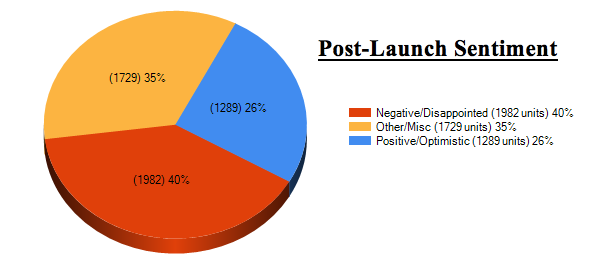

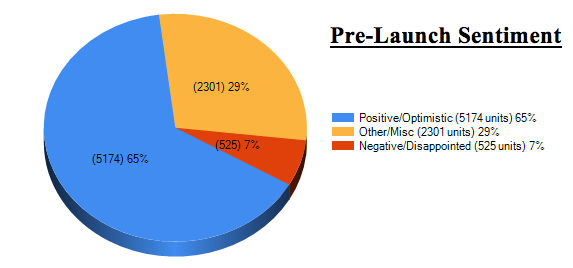

campaign or just bad P.R., the iPhone 4S launch was supposedly a disappointment for many, but how can we be so sure? Bloggers and journalists were clearly saddened, but for all we know, their unhappiness stems from the thousands of hits their blogs won’t receive and the millions of copies their papers won’t sell… If journalists and bloggers want to gauge sentiment, they should probably start with Social Media analysis like we do.

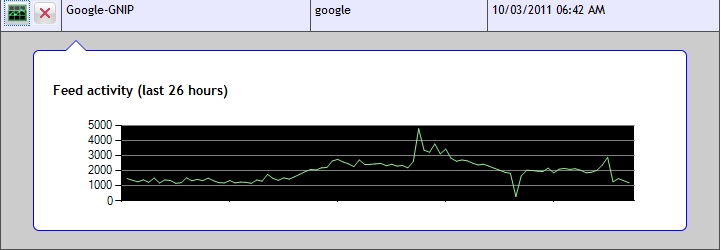

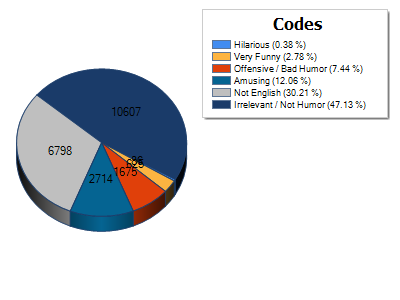

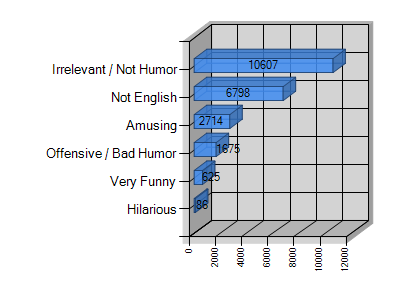

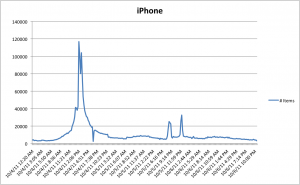

6th. All told, we collected about 3.1 million tweets. Prior to mid-morning on the 4th, we were ingesting about 4 iPhone tweets per second, but by late afternoon we were collecting about 130 tweets per second. By the morning of the 6th, social media chatter had settled down a bit and leveled out to about 7 tweets per second. Our question was: what sentiment lay beneath this noise of jokes, links, advertisements, announcements, and irrelevance? Only one way to find out: DiscoverText Classification.

We split our data into two subsets: the first contained only tweets prior to the launch that mentioned “iPhone,” whereas the second contained tweets from a few hours after the launch. (This is because for a few hours after the launch, some people still didn’t know that the iPhone 5 was not coming.) By quickly programming a sentiment classifier, we concluded that prior to the iPhone 4S launch, 65% of the iPhone comments were positive, optimistic, or enthusiastic, and just a few hours later only 26% of the iPhone comments were positive and 40% of them were negative.
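To put that swing in perspective, the arithmetic on the figures above can be checked in a few lines of Python. This is only a sketch: the function names are made up for illustration, and the "neither" share assumes positive and negative comments do not overlap, which the classifier setup here does not spell out.

```python
def point_change(before_pct, after_pct):
    """Change between two shares, in percentage points."""
    return after_pct - before_pct


def relative_change(before_pct, after_pct):
    """Change relative to the starting share, as a percentage."""
    return (after_pct - before_pct) / before_pct * 100


positive_before = 65  # share of positive comments before the launch
positive_after = 26   # share of positive comments a few hours after
negative_after = 40   # share of negative comments a few hours after

print(point_change(positive_before, positive_after))
print(round(relative_change(positive_before, positive_after)))
print(100 - positive_after - negative_after)
```

The positive share fell by 39 percentage points, a relative drop of about 60%, and under the no-overlap assumption roughly 34% of the post-launch comments were neither positive nor negative.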